SEEKING FOUNDERS & TECH LEADERS

VIEW MVL OPPORTUNITIES

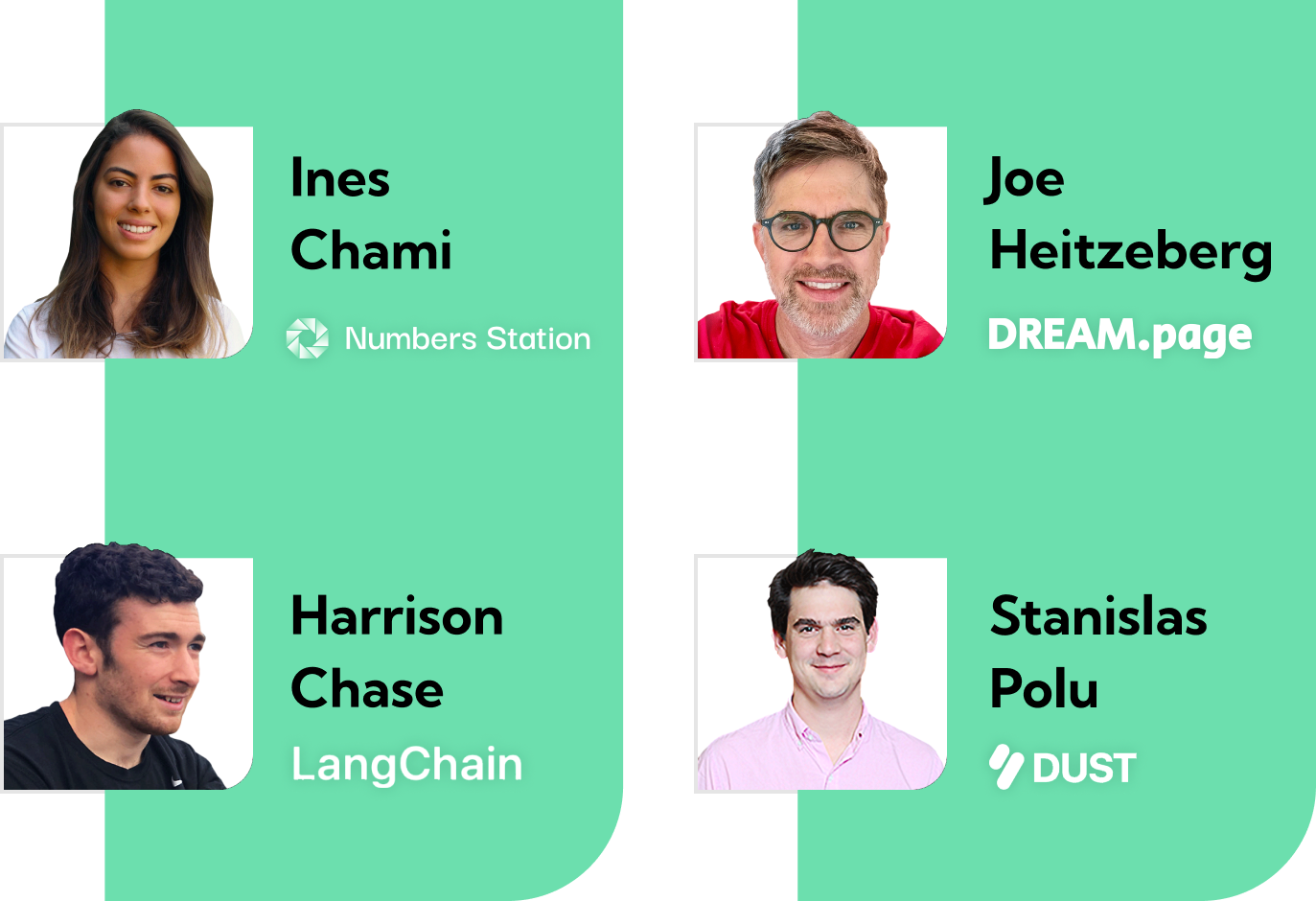

On Day 3 of Launchable: Foundation Models, moderator Joe Heitzeberg and speakers Harrison Chase, Ines Chami, and Stanislas Polu came together to discuss prompt engineering, the future of models, and the complexity of using and fine-tuning models.

Heitzeberg is the founder and builder of a variety of startup companies. Chase is the creator of LangChain, Chami is one of the founders of Numbers Station, and Polu worked for OpenAI before creating dust.tt.

The first topic the panelists discussed was prompt engineering. Polu described it as, at its core, encoding a task for a model, then making decisions with the data and eventually chaining operations. Chase agreed, saying it is about constructing the prompt in an interesting way and pulling things in, but also about using outputs creatively: parsing them and feeding them downstream.
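The pattern Polu and Chase describe (build a prompt, parse the output, feed it into the next step) can be illustrated with a minimal sketch. Everything here is an assumption for illustration: `fake_model` is a hypothetical stand-in that returns canned text rather than calling a real model, and the templates and helper names are invented.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call; returns canned text."""
    if prompt.startswith("List the cities"):
        return "Paris, Tokyo, Lima"
    return f"Summary for: {prompt.splitlines()[-1]}"


def build_prompt(template: str, **values: str) -> str:
    """Fill a prompt template with task-specific values."""
    return template.format(**values)


def parse_list(output: str) -> list[str]:
    """Parse a comma-separated model output into clean items."""
    return [item.strip() for item in output.split(",") if item.strip()]


def run_chain(model: Callable[[str], str], text: str) -> dict[str, str]:
    """Step 1 extracts items; step 2 runs one follow-up prompt per item."""
    first = model(build_prompt("List the cities mentioned in:\n{text}", text=text))
    cities = parse_list(first)
    return {
        city: model(build_prompt("Write one sentence about this city:\n{city}", city=city))
        for city in cities
    }


result = run_chain(fake_model, "I flew from Paris to Tokyo, then Lima.")
```

Swapping `fake_model` for a real model client would leave the chain unchanged, since each step only depends on a function from prompt text to output text.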

The next focus was on the state of models and their future trajectory. Polu recommended using Davinci because of how quickly the landscape is changing.

“I think we are right now at a very special moment because we'll see a commoditization of exactly the right size of model, and there'll be a tailwind, making it better,” Polu said. “I'm not sure we need GPT-5 to change the world, we can just do it today.”

The topic then shifted to open source models. Chami said there is an exciting trend of more open source models being released, and that in the early days of a product, before there is product-market fit, open source models are a great resource. Builders can iterate quickly early on, but as the gains from prompting and prompt engineering narrow, they will hit a ceiling.

Chami also recommended that, once a builder hits that ceiling, they experiment with different variations of the prompt, parse the output, and make sure it is valid code.
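One hedged way to picture "making sure it is valid code" is a syntax check over several candidate outputs, for example from different prompt variations. The sketch below assumes the generated code is Python, which the panel did not specify; the helper names are hypothetical.

```python
import ast
from typing import Optional


def is_valid_python(source: str) -> bool:
    """Return True if the text parses as Python; this checks syntax only."""
    try:
        ast.parse(source)
    except SyntaxError:
        return False
    return True


def first_valid(candidates: list[str]) -> Optional[str]:
    """Return the first candidate output that parses, or None if none do."""
    for candidate in candidates:
        if is_valid_python(candidate):
            return candidate
    return None


# Outputs that might come from two variations of the same prompt.
outputs = ["def f(:", "def f(): return 1"]
chosen = first_valid(outputs)
```

A syntax check says nothing about whether the code does the right thing; for other targets such as SQL, the same idea would need a parser for that language.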

Polu then noted that spending more compute could raise the quality of outputs, but at a cost. He brought up the Anthropic paper showing that models are calibrated. This means that if a model makes a prediction, the user can go back with a slightly different prompt and ask whether the model thinks the prediction is correct; the answer reflects the probability that the prediction is correct.
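The self-check Polu describes can be sketched as follows. This is an illustration under stated assumptions, not the method from the paper he mentioned: it estimates confidence by asking the follow-up question several times and counting "Yes" replies, and `make_fake_model` is a hypothetical stand-in that replays scripted answers instead of calling a real model.

```python
import itertools
from typing import Callable


def self_check_prompt(question: str, proposed: str) -> str:
    """Build the follow-up prompt asking the model to grade an answer."""
    return (
        f"Question: {question}\n"
        f"Proposed answer: {proposed}\n"
        "Is the proposed answer correct? Answer Yes or No."
    )


def estimate_confidence(
    ask: Callable[[str], str], question: str, proposed: str, samples: int = 10
) -> float:
    """Fraction of sampled replies that start with 'yes'."""
    prompt = self_check_prompt(question, proposed)
    votes = [ask(prompt).strip().lower().startswith("yes") for _ in range(samples)]
    return sum(votes) / samples


def make_fake_model(replies: list[str]) -> Callable[[str], str]:
    """Hypothetical stand-in: cycles through scripted replies."""
    cycle = itertools.cycle(replies)
    return lambda prompt: next(cycle)


model = make_fake_model(["Yes", "Yes", "Yes", "Yes", "No"])
confidence = estimate_confidence(model, "What is 2 + 2?", "4", samples=10)
```

With a real model that exposes token probabilities, the probability assigned to "Yes" could be read directly instead of sampling.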

Chase described the complexity of constructing prompts, saying that chains can make things more complicated, so it is important to understand where something is going wrong in order to troubleshoot it.

On regression testing, Chase said that compiling examples is step one, followed by building data sets and running them systematically. He spoke of work coming out of Anthropic on using models to judge other models: there is a lot of value in reviewing outputs manually, but a model may work well enough to judge them qualitatively.
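A minimal sketch of the workflow Chase outlines: collect examples, run them systematically, and pass each output to a judge. The names are hypothetical, and the judge here is a plain exact-match function; a model-backed judge could be plugged in through the same `judge` parameter.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Example:
    prompt: str
    expected: str


def exact_match_judge(output: str, expected: str) -> bool:
    """Simplest possible judge; a model-based judge could replace it."""
    return output.strip().lower() == expected.strip().lower()


def run_regression(
    model: Callable[[str], str],
    judge: Callable[[str, str], bool],
    examples: list[Example],
) -> dict:
    """Run every example through the model and record which ones fail."""
    failures = [ex for ex in examples if not judge(model(ex.prompt), ex.expected)]
    return {
        "total": len(examples),
        "passed": len(examples) - len(failures),
        "failed_prompts": [ex.prompt for ex in failures],
    }


# Hypothetical stand-in for a model: a lookup table of canned answers.
canned = {"Capital of France?": "Paris", "Capital of Peru?": "Quito"}
examples = [Example("Capital of France?", "paris"), Example("Capital of Peru?", "Lima")]
report = run_regression(lambda p: canned[p], exact_match_judge, examples)
```

Keeping the failed prompts in the report makes it easier to review them manually, which the panel still considered valuable.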

On where the moats are in a business, and how to approach building one, Chami said the moat is about personalization: understanding how people are using models and where the models are failing. She said it is about understanding where to improve, gathering feedback from users, and using it to improve the model over time. She also noted that improving a single general-purpose model is harder, because prompting tricks only go so far before hitting a ceiling. This is where a shift from a black-box model to an open model can help: investing in fine-tuning pays off for a business, and hosting open source models yourself means you can train them.

Polu assured the audience that builders shouldn’t be scared of better models, as the real moat is a product that sits at the point of use and is great at executing its task.

On the rumors about GPT-4, Polu said that it will be a better model. He stated that it will be much more powerful, and that the team may have found a potentially more powerful unlock since his time working on it.

The panelists then spoke about what they find to be the most exciting uses for models. Chase pointed to the personal assistants being created and their ability to understand human interactions and human intent. Polu believes the most exciting uses are those that help individuals save a bit of time throughout the day, so that they can be more productive in other areas. Heitzeberg touched on embeddings and vector search, describing the ability to index data, run semantic searches over it, and feed the results into prompts.
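The index, search, and feed-into-prompt flow Heitzeberg mentions can be sketched in a few lines. The `embed` function below is a toy word-count vector used only to keep the example self-contained; it is an assumption standing in for a real embedding model, and it matches on shared words rather than meaning.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def search(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Place the retrieved documents in the prompt ahead of the question."""
    context = "\n".join(search(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = [
    "the refund policy lasts thirty days",
    "our office is in seattle",
    "shipping takes five days",
]
top = search("what is the refund policy", docs)
prompt = build_prompt("what is the refund policy", docs)
```

In practice the document vectors would be computed once and stored in an index rather than recomputed on every query, as this sketch does for brevity.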

The panelists were asked about what to look at as builders graduate beyond a demo, and what the challenges can be to reach a production scale.

Chami said that getting good quality can be easy, but running a model over a large amount of data may be infeasible, so integration is the big challenge, especially depending on the vertical, the industry, and regulation. She also touched again on fine-tuning, saying she’d love to see small open source models that are good at zero-shot decision making.

The panelists reiterated that everything is improving within the world of AI, and while open source is a bit behind, the general trend is upwards.

Polu advised builders to experiment and explore to figure out the best UX. For example, he put a paywall on his product to filter for users who were truly interested, which helped him get higher-quality feedback on the product.

Finally, the panel touched on techniques for carrying context across queries to public APIs. Chase brought up the LangChain concept of memory, and said that some models such as ChatGPT may simply pass information back into the prompt while summarizing the most recent lines of conversation.

“I would say it's plain just reading the past and you know, it's like a long table and when you fill the table stuff starts falling off the table and it just forgets about it,” Polu said.
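Polu's table metaphor maps onto a sliding-window buffer: past turns are replayed into each prompt, and the oldest ones drop off once a size budget is exceeded. The sketch below is an assumption-laden illustration, not how any particular product works; the class name is hypothetical, and it counts words as a rough stand-in for tokens.

```python
from collections import deque


class ConversationMemory:
    """Keeps recent turns; the oldest fall off when the budget is exceeded."""

    def __init__(self, max_words: int) -> None:
        self.max_words = max_words
        self.turns: deque[str] = deque()

    def _size(self) -> int:
        # Word count is a crude proxy for a model's token limit.
        return sum(len(turn.split()) for turn in self.turns)

    def add(self, speaker: str, text: str) -> None:
        self.turns.append(f"{speaker}: {text}")
        # Drop the oldest turns until the history fits (always keep the latest).
        while self._size() > self.max_words and len(self.turns) > 1:
            self.turns.popleft()

    def as_prompt(self, new_message: str) -> str:
        """Replay the remembered turns ahead of the new user message."""
        history = "\n".join(self.turns)
        return f"{history}\nUser: {new_message}\nAssistant:"


memory = ConversationMemory(max_words=8)
memory.add("User", "my name is Ada")
memory.add("Assistant", "hello Ada")
memory.add("User", "what is the weather")
prompt = memory.as_prompt("and what is my name")
```

Here the first turn has already fallen off, so the model would no longer see the user's introduction. Summarizing older turns before dropping them is one way to keep some of that context.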

We are with our founders from day one, for the long run.